ETH Zurich's wheeled


ETH Zurich researchers have developed a control system that can enable wheeled-legged robots to navigate a variety of urban environments autonomously.

The robot was equipped with sophisticated navigational abilities thanks to a combination of machine learning algorithms. It was tested in the cities of Seville, Spain, and Zurich, Switzerland.

With minimal human assistance, the team’s ANYmal wheeled-legged robot completed kilometer-scale autonomous missions in urban settings.

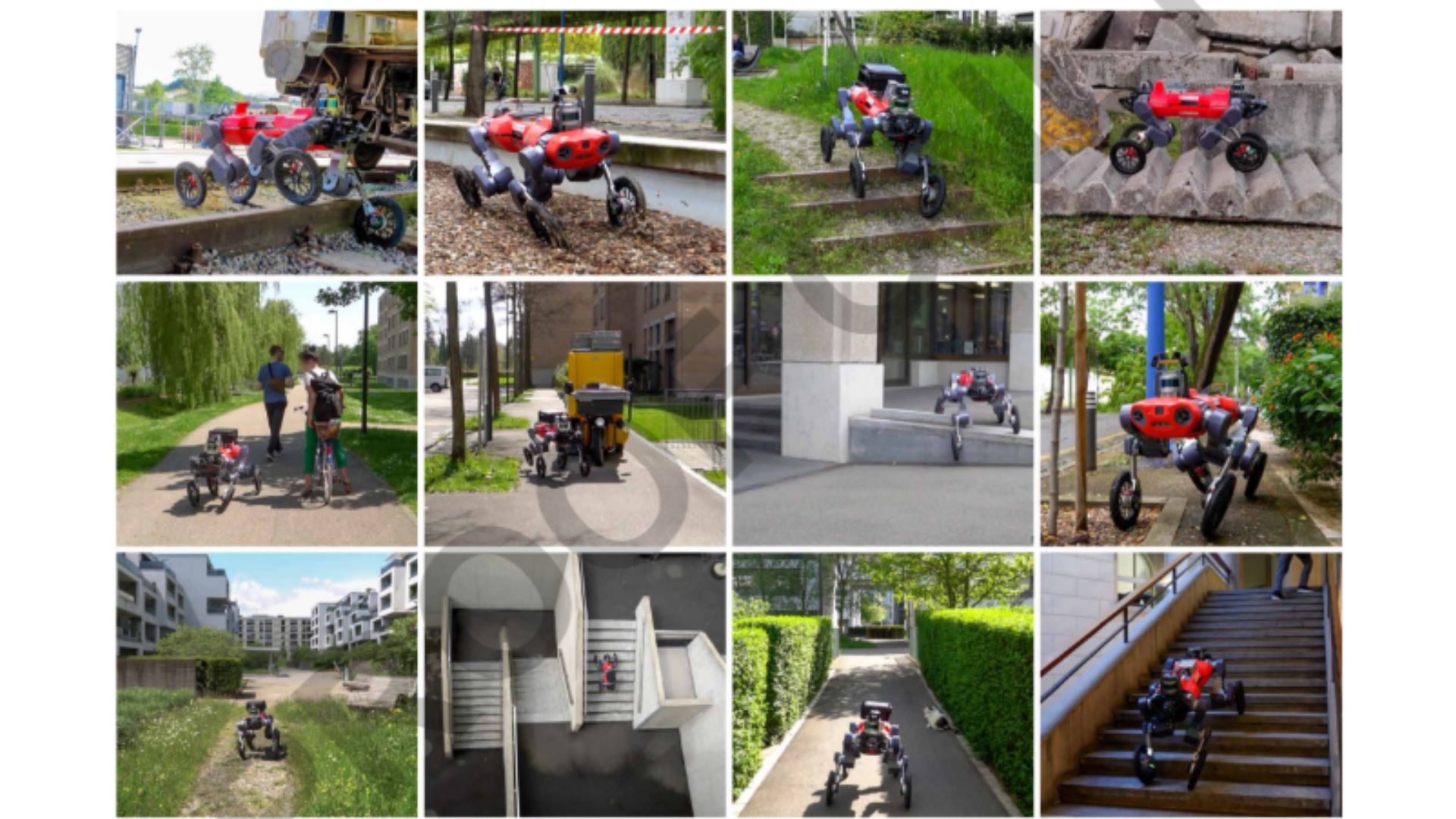

It made its way past a variety of obstacles, including pedestrians, uneven steps, stairs, and natural terrain.

The team says the autonomous navigation system helped the robot explore new areas, recall previously visited locations to inform real-time decisions, and respond quickly to changing circumstances, such as pedestrians blocking its path.

Robust urban navigation achieved

The ETH Zurich team engineered a sophisticated autonomous navigation system for wheeled-legged robots, seamlessly integrating navigation and locomotion controls.

The method combined a hybrid locomotion controller, developed through model-free reinforcement learning (RL) and privileged learning, with a navigation controller optimized via hierarchical RL (HRL).
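Privileged learning is commonly implemented as teacher-student distillation: a teacher policy in simulation can read ground-truth quantities (here, terrain friction) that a real robot cannot measure, and a student is then trained to reproduce the teacher's actions from onboard signals alone. The sketch below assumes that form and shrinks it to one dimension. The friction range, the noisy "slip" signal, the gain of 2.0, and the linear least-squares student are hypothetical stand-ins, not the team's networks or training setup.

```python
import random
from statistics import mean
from typing import List, Tuple

def teacher_action(friction: float) -> float:
    """Teacher: sees the privileged ground-truth friction directly (hypothetical gain)."""
    return 2.0 * friction

def proprioceptive_history(friction: float, rng: random.Random, steps: int = 10) -> List[float]:
    """Student input: a noisy slip signal that reflects friction only indirectly (hypothetical)."""
    return [1.0 - friction + rng.gauss(0.0, 0.05) for _ in range(steps)]

def distill(num_samples: int = 2000, seed: int = 0) -> Tuple[float, float]:
    """Fit a linear student a = w * mean(history) + b to the teacher's actions
    with ordinary least squares (a stand-in for training a neural student)."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(num_samples):
        mu = rng.uniform(0.2, 1.0)                        # privileged, simulator-only
        xs.append(mean(proprioceptive_history(mu, rng)))  # what the student sees
        ys.append(teacher_action(mu))                     # supervision target
    mx, my = mean(xs), mean(ys)
    w = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return w, my - w * mx

def student_action(history: List[float], w: float, b: float) -> float:
    """Deployment: the student acts from onboard signals only, no privileged input."""
    return w * mean(history) + b
```

After distillation the student recovers roughly `w = -2` and `b = 2`, so it approximates the teacher without ever observing friction directly.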

According to the team, the controllers were trained on simulated data and then integrated into a global navigation system that uses digital-twin models and high-resolution laser scans for experiment planning and onboard localization.

“Current work on wheel-legged platforms has gone beyond bioinspiration, but simultaneously opens new questions on how inspiration from animals can further improve human-made systems,” said Krzysztof Walas from Politechnika Poznanska in a statement.

According to the researchers, the controllers enabled adaptive gait selection, efficient terrain negotiation, and responsive navigation that avoided both static and dynamic obstacles.

Practical assessments highlighted the potential of wheeled-legged robots for achieving efficient and robust autonomy in real-world scenarios.

The team claims that additional comparative studies confirm that its tightly integrated navigation controller outperforms traditional systems.

Advanced autonomous mobility

The autonomous navigation system for wheeled-legged robots integrates multiple payloads for localization, terrain mapping, and safety, including LIDAR sensors, a stereo camera, a delivery box, a 5G router, and a GPS antenna.

The stereo camera, equipped with high-frequency object detection, enables real-time tracking of people within a 20-meter range, enhancing safety.

To address dynamic obstacles, the team buffers the elevation map around detected human positions.
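One way to read "buffering" the elevation map is to raise every grid cell within a fixed radius of a tracked person, so that a planner reading the map treats that person as an untraversable obstacle. The sketch below assumes that interpretation. The grid layout (row index along y, column along x, origin at the map corner), the 0.1 m resolution, the 0.5 m radius, and the 2 m marker height are hypothetical; only the 20-meter tracking range comes from the article.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]

def buffer_humans(
    elevation: Grid,
    humans: List[Tuple[float, float]],
    robot_xy: Tuple[float, float],
    resolution: float = 0.1,       # hypothetical: meters per cell
    radius: float = 0.5,           # hypothetical buffer radius in meters
    obstacle_height: float = 2.0,  # hypothetical height that marks a blocked cell
    max_range: float = 20.0,       # tracking range quoted in the article
) -> Grid:
    """Return a copy of the map with a raised disc around each tracked person."""
    out = [row[:] for row in elevation]  # leave the input map untouched
    rows, cols = len(out), len(out[0])
    r_cells = math.ceil(radius / resolution)
    for hx, hy in humans:
        # Ignore detections beyond the camera's tracking range.
        if math.hypot(hx - robot_xy[0], hy - robot_xy[1]) > max_range:
            continue
        ci, cj = math.floor(hy / resolution), math.floor(hx / resolution)
        for i in range(max(0, ci - r_cells), min(rows, ci + r_cells + 1)):
            for j in range(max(0, cj - r_cells), min(cols, cj + r_cells + 1)):
                # Raise the cell if its center lies inside the buffer disc.
                cx, cy = (j + 0.5) * resolution, (i + 0.5) * resolution
                if math.hypot(cx - hx, cy - hy) <= radius:
                    out[i][j] = max(out[i][j], obstacle_height)
    return out
```

Returning a copy keeps the static terrain map intact, so the buffer can be rebuilt from fresh detections on every update as people move.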

The navigation system employs a hierarchical approach, extracting waypoints from a global navigation path. It also uses a pure-pursuit-inspired algorithm for waypoint tracking.
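In its classic form, pure pursuit steers toward a "lookahead" point a fixed distance ahead on the path and commands the curvature of the circular arc that reaches it. The sketch below shows that classic version. The team's algorithm is described only as pure-pursuit-inspired, so the lookahead rule and the function names here are assumptions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

def lookahead_waypoint(path: List[Point], robot: Point, lookahead: float) -> Point:
    """First path point at least `lookahead` meters from the robot, searching
    forward from the closest path point; falls back to the final point (the goal)."""
    closest = min(range(len(path)), key=lambda k: math.dist(path[k], robot))
    for p in path[closest:]:
        if math.dist(p, robot) >= lookahead:
            return p
    return path[-1]

def pure_pursuit_curvature(robot: Point, heading: float, target: Point) -> float:
    """Classic pure-pursuit curvature kappa = 2 * y / d^2, where y is the target's
    lateral offset in the robot frame and d is the distance to the target."""
    dx, dy = target[0] - robot[0], target[1] - robot[1]
    # Rotate the world-frame offset into the robot frame (x forward, y left).
    y_local = -math.sin(heading) * dx + math.cos(heading) * dy
    d2 = dx * dx + dy * dy
    return 0.0 if d2 == 0.0 else 2.0 * y_local / d2
```

A positive curvature turns the robot left and a negative one turns it right; a target straight ahead yields zero curvature.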

A high-level controller (HLC) guides the robot along intermediate waypoints by issuing commands to a low-level controller (LLC).

Both controllers are neural networks trained via RL, and the HLC is the study’s novel contribution: it combines local navigation planning and path-following control in one learned controller.
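The division of labor between the two controllers can be sketched as a two-rate loop, assuming the HLC turns a waypoint into a velocity command at a lower rate and the LLC tracks that command at a higher rate. Both learned networks are replaced here by hand-written stand-ins (a proportional go-to-goal rule and a first-order lag on unicycle kinematics), and the 50 Hz and 10 Hz rates are hypothetical. The sketch illustrates only the hierarchy, not the trained policies.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Command = Tuple[float, float]  # (forward speed in m/s, yaw rate in rad/s)

@dataclass
class State:
    x: float = 0.0
    y: float = 0.0
    yaw: float = 0.0
    v: float = 0.0  # speed the LLC is currently producing
    w: float = 0.0  # yaw rate the LLC is currently producing

def high_level_policy(s: State, waypoint: Tuple[float, float]) -> Command:
    """Stand-in for the learned HLC: maps a waypoint to a velocity command."""
    dx, dy = waypoint[0] - s.x, waypoint[1] - s.y
    # Express the waypoint in the robot frame (x forward, y left).
    fx = math.cos(s.yaw) * dx + math.sin(s.yaw) * dy
    fy = -math.sin(s.yaw) * dx + math.cos(s.yaw) * dy
    bearing = math.atan2(fy, fx)
    # Slow down near the waypoint and when pointing away from it.
    v_cmd = min(1.0, math.hypot(fx, fy)) * max(0.0, math.cos(bearing))
    return v_cmd, 2.0 * bearing

def low_level_step(s: State, cmd: Command, dt: float) -> None:
    """Stand-in for the learned LLC: tracks the command with a first-order lag,
    then integrates unicycle kinematics."""
    s.v += 0.2 * (cmd[0] - s.v)
    s.w += 0.2 * (cmd[1] - s.w)
    s.x += s.v * math.cos(s.yaw) * dt
    s.y += s.v * math.sin(s.yaw) * dt
    s.yaw += s.w * dt

def run(waypoint: Tuple[float, float], seconds: float = 20.0,
        llc_hz: int = 50, hlc_hz: int = 10) -> State:
    s, dt = State(), 1.0 / llc_hz
    cmd: Command = (0.0, 0.0)
    for k in range(int(seconds * llc_hz)):
        if k % (llc_hz // hlc_hz) == 0:  # HLC updates every 5th LLC tick here
            cmd = high_level_policy(s, waypoint)
        low_level_step(s, cmd, dt)  # LLC holds the latest command in between
    return s
```

Calling `run((3.0, 2.0))` drives the simulated robot from the origin to a point close to the waypoint. Swapping either stand-in for a neural network would leave the loop structure unchanged.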

The locomotion controller (LLC), based on recurrent neural networks (RNNs), autonomously selects gaits and transitions between walking and driving modes. It is trained using privileged learning in simulation environments.
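A recurrent network keeps a hidden state between control steps, so its output can depend on recent terrain as well as on the current observation. The toy cell below illustrates that memory effect with an explicit walk-or-drive choice. The real LLC outputs joint-level actions and its gait emerges from training, so the two input features, the hand-picked weights, and the discrete mode output are all hypothetical simplifications.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]

def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def softmax(z: Sequence[float]) -> Vector:
    top = max(z)  # subtract the max for numerical stability
    e = [math.exp(x - top) for x in z]
    total = sum(e)
    return [x / total for x in e]

class TinyRecurrentGaitSelector:
    """Elman-style RNN cell whose hidden state carries terrain context between steps.
    Weights are hand-picked placeholders, not trained values."""

    MODES = ("walk", "drive")

    def __init__(self) -> None:
        self.w_xh: Matrix = [[1.5, 2.0], [-1.0, -1.5]]  # input -> hidden
        self.w_hh: Matrix = [[0.8, 0.0], [0.0, 0.8]]    # hidden -> hidden (memory)
        self.b_h: Vector = [0.0, 0.5]
        self.w_hy: Matrix = [[2.0, -1.0], [-2.0, 1.0]]  # hidden -> mode logits
        self.h: Vector = [0.0, 0.0]

    def step(self, obs: Sequence[float]) -> Tuple[str, Vector]:
        """obs = [terrain_roughness, step_height] (hypothetical features)."""
        pre = [a + b + c for a, b, c in
               zip(matvec(self.w_xh, obs), matvec(self.w_hh, self.h), self.b_h)]
        self.h = [math.tanh(p) for p in pre]  # updated memory
        probs = softmax(matvec(self.w_hy, self.h))
        return self.MODES[probs.index(max(probs))], probs
```

Starting fresh, flat input (`[0.0, 0.0]`) selects driving. After one rough step (`[0.8, 0.3]`), the same flat input still selects walking because the hidden state remembers the rough terrain, and it takes a few more flat steps to switch back.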

The team’s training environment, inspired by the navigation graphs used in computer games, provides diverse challenges such as detours, dynamic obstacles, and rough terrain.

Kilometer-scale autonomous deployments in the urban environments of Zurich and Seville demonstrate the system’s capability; in Zurich, the robot covered 8.29 kilometers (5.15 miles) with minimal human intervention.

A handheld laser scanner captures dense point clouds of the area, which are georeferenced and converted into a mesh representation for creating a navigation graph.
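Once a navigation graph exists, a global path between two nodes can be found with a standard shortest-path search. The sketch assumes, hypothetically, that graph nodes carry 2D positions and that edge cost is straight-line length, and it uses Dijkstra's algorithm; the article does not say which search the team uses.

```python
import heapq
import math
from typing import Dict, List, Tuple

Node = str

def shortest_path(
    positions: Dict[Node, Tuple[float, float]],
    edges: List[Tuple[Node, Node]],
    start: Node,
    goal: Node,
) -> List[Node]:
    """Dijkstra over an undirected navigation graph whose edge cost is Euclidean
    length. Returns [] if the goal is unreachable."""
    adj: Dict[Node, List[Tuple[Node, float]]] = {n: [] for n in positions}
    for a, b in edges:
        d = math.dist(positions[a], positions[b])
        adj[a].append((b, d))
        adj[b].append((a, d))
    dist = {start: 0.0}
    prev: Dict[Node, Node] = {}
    heap = [(0.0, start)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == goal:
            break
        if d > dist.get(u, math.inf):
            continue  # stale heap entry
        for v, w in adj[u]:
            nd = d + w
            if nd < dist.get(v, math.inf):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if goal not in dist:
        return []
    # Walk the predecessor links back from the goal.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]
```

In a toy graph where node `C` lies just off the straight line between `A` and `B` and node `D` is a long detour, the search returns `["A", "C", "B"]`.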

The robot localizes itself using onboard sensors and navigates toward goal points autonomously, with paths converted into robot-relative coordinates for navigation policy guidance.
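Converting a path into robot-relative coordinates amounts to subtracting the robot's position and rotating by the inverse of its heading. The sketch below is a 2D simplification (the deployed system works with full 3D poses), and the `(x, y, yaw)` pose format is an assumption.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (x, y, yaw) in the map frame

def to_robot_frame(points: List[Point], pose: Pose) -> List[Point]:
    """Express map-frame points in the robot frame (x forward, y left)."""
    x0, y0, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    out = []
    for px, py in points:
        dx, dy = px - x0, py - y0
        # Apply the inverse rotation R(yaw)^T to the map-frame offset.
        out.append((c * dx + s * dy, -s * dx + c * dy))
    return out
```

For a robot at (1, 1) facing along +y, the map point (1, 3) becomes (2, 0): two meters straight ahead.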

The researchers say multiple long-distance experiments validated the system’s robustness. In these experiments, the robot had to navigate diverse obstacles to reach distant goal points, which were selected manually to maximize coverage.

The system showcases advanced autonomy and adaptability in complex urban environments, offering promising applications in various real-world scenarios.

The details of the team’s research were published in the journal Science Robotics on April 24.